PhD Course: Eye tracking in Desktop, Natural and Virtual Environments (13 – 15 February 2018 | Monash University, Melbourne, Australia)


Enquiries: Harmen.Oppewal@monash.edu or: Meissner@sam.sdu.dk

What other participants say about our course:

“An interesting, insightful and enjoyable course on eye tracking!”

“I like that we got a very good overview of different relevant topics. I also find it very useful to present briefly our projects and received a lot of useful feedback.”

“In one week I improved to a degree of knowledge about eye tracking that I could hardly reach by myself in one or two months.”

“I really enjoyed the time in the course and the social events! It was a good idea to invite people from different disciplines.”

“Learned a lot, also about technological cutting-edge aspects of eye tracking from very nice people.”

Purpose and content:

Description:

Much of the rapid growth of research on attention, and especially on eye tracking, has been driven by fast technological development in recent years and a sharp decline in the cost of eye tracking equipment. Remote, head-mounted, portable and mobile devices are increasingly used because they make it easier to collect larger samples of respondents in new, unexplored environments. Eye tracking makes it possible to study attentional processes in great detail, classically in front of computer screens, but also in mobile contexts, for example when people use digital devices such as smartphones or smart glasses (Google Glass, Microsoft HoloLens, Tobii Pro Glasses).

The qualifications and skills that applied behavioral researchers in business obtain in their preparatory study programs typically do not prepare them sufficiently to use eye tracking devices effectively, avoid potential pitfalls and analyze more complex eye tracking datasets. This course aims to give PhD students and other researchers new to the field a basic introduction to eye tracking technology that will allow them to use eye tracking in their own research projects. Participants will gain an overview of research on bottom-up and top-down attentional processes. We will also discuss the latest developments in eye tracking, including how to apply eye tracking in virtual reality environments. From a practical perspective, participants will gain insight into how to set up eye tracking experiments. They will also record eye tracking data and learn how to analyze it. Participants will have the opportunity to use remote and mobile eye trackers and to analyze their datasets with the software provided. Based on their course experience, participants will be able to reflect critically on their experimental work and improve the planning of their own future empirical studies.

Course Content:

The course will cover the following topics:

- Eye tracking basics

- Bottom-up and top-down processes of visual attention

- Eye tracking measures and their meaning (pupil dilation, fixation duration, eye blinks, saccadic distances)

- Handling and management of eye tracking data

- Mobile eye tracking equipment and annotation of fixations

- Use of mobile eye tracking in virtual and augmented reality

- Open source eye tracking software

- Analysis of eye tracking data

- Hands-on session with mobile eye tracking equipment: record a short sequence with a mobile eye tracker

- Individual feedback by course instructors on submitted research proposals or posters

Format:

The course combines a lecture/discussion format with a hands-on experimental component. The interactive lectures will focus on the theoretical background of visual attention. In a hands-on practical exercise, participants will record eye movements in a lab setting. Participants can then analyze the data with the open source software provided, as well as with other (open source) statistical software packages of their choice. Finally, they will present first results and discuss what they have learned in class. We will also bring mobile eye tracking equipment to class so that participants can try out and become familiar with new mobile eye tracking technologies and existing open source software, and receive instruction on the do’s and don’ts of the various devices.

Learning objectives:

After completing the course, participants will have:

- an understanding of problems associated with eye tracking studies using different sorts of equipment

- an understanding of the data generating process

- an ability to assess the potentials and limits of using eye tracking in their own empirical research

- an ability to independently design and analyze eye tracking experiments and circumvent pitfalls typically encountered when using eye tracking technology

- an understanding of the various ways in which eye tracking data can be analyzed

- an understanding of state-of-the-art theories of visual attention

Prerequisites:

This course is targeted at PhD students as well as more experienced researchers who are new to the field of eye tracking and who intend to use eye tracking in their research projects. Projects can be based in business studies, psychology, experimental economics, information systems or any other social science. Basic knowledge of statistics (master’s level) and familiarity with statistical software packages such as SPSS, SAS, Stata or R are desirable but not required.

Application deadline: November 20, 2017

Fee: None

Evaluation:

Certificates of completion will be issued based on class attendance, participation and the submitted poster.

Application and selection procedure:

All applicants are required to submit a poster, a motivation letter and a short bio or CV to Martin Meißner (Meissner@sam.sdu.dk) no later than November 20, 2017.

- The poster (max. DIN A1 format) outlines the intended use or application of eye tracking in the applicant’s study project and should therefore include: (1) (preliminary) research question(s); (2) a description of the data or data collection process; (3) a description of the (planned) experiments/studies; (4) empirical results (if available); and (5) key references. During the course, every participant will be asked to give a five-minute introduction to his or her poster.

- The motivation letter (max. 1 page A4) should explain the applicant’s main interest in and motivation for participating in the course.

- The bio or CV (max. 1 page A4) should list the applicant’s prior qualifications and key research achievements, as well as the current study or degree program and/or the academic or research position currently held.

Please note (important!):

- The course can accommodate only a small number of attendees. This is due to equipment availability but also to ensure the instructors are able to work closely with all participants and provide individual recommendations and feedback.

- Applicants will be selected based on their submitted posters, project proposals and motivation letters. Places are limited.

- Participants must make their own travel and accommodation arrangements and reservations.

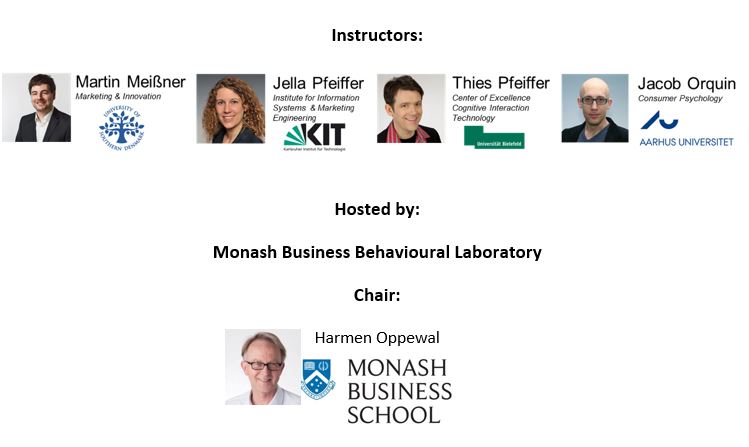

Instructor bios

Martin Meißner is Associate Professor at the Department of Sociology, Environmental and Business Economics at the University of Southern Denmark (Denmark) and an adjunct senior lecturer at the Department of Marketing at Monash University (Australia). He holds a PhD in Marketing from Bielefeld University. His research focuses on the analysis and modeling of eye-tracking data, the analysis of brand images, and the development of preference measurement methods. His work has been published, among others, in the Journal of Marketing Research, the International Journal of Innovation Management, and the Proceedings of the International Conference on Information Systems.

Jella Pfeiffer is a postdoc and “Privatdozent” (PD) at the Institute of Information Systems and Marketing at the Karlsruhe Institute of Technology (KIT), where she is also manager of the Karlsruhe Decision & Design Laboratory (KD2Lab). She received her PhD in Information Systems and her Venia Legendi (“Habilitation”) in Business Administration from the University of Mainz. Her research interests include decision support systems in e-commerce and m-commerce, consumer decision making, Neuro Information Systems (in particular eye tracking), human-computer interaction, and experimental research in the laboratory, in the field and in virtual reality. Her work has been published, among others, in the Journal of the Association for Information Systems, the European Journal of Operational Research and the Journal of Behavioral Decision Making.

Thies Pfeiffer is a senior researcher at the Center of Excellence Cognitive Interaction Technology at Bielefeld University, where he is technical director of the virtual reality lab. He holds a doctoral degree in informatics (Dr. rer. nat.) with a specialization in human-machine interaction. His research interests include human-machine interaction with a strong focus on gaze and gesture, augmented and virtual reality, and immersive simulations for prototyping. He has organized several scientific events related to the topics of this course, such as the GI Workshop on Virtual and Augmented Reality 2016, the Workshops on Solutions for Automatic Gaze Analysis (SAGA) in 2013 and 2015, and several others (PETMEI 2014, ISACS 2014). Currently, he is principal investigator in research projects on training in virtual reality (ICSPACE, DFG), augmented reality-based assistance systems (ADAMAAS, BMBF) and prototyping for augmented reality (ProFI, BMBF).

Jacob L. Orquin is an Associate Professor at the Department of Management at Aarhus University, where he conducts research on eye movements in decision making. He also works on methodological issues in eye tracking, such as the reporting of eye tracking studies and threats to the validity of eye tracking research.

Harmen Oppewal is Professor and Head of the Department of Marketing at Monash University, Australia. He holds a PhD from the Technical University of Eindhoven in the Netherlands. His research centers on modeling and understanding consumer decision-making behavior in retail and services contexts, in particular assortment perception, channel and destination choice, preference formation, and visualization effects. He has over fifty publications in leading journals including the Journal of Business Research, Journal of Consumer Research, Journal of Marketing Research, and the Journal of Retailing, among others.

Key references:

Meißner, M., Pfeiffer, J., Pfeiffer, T., & Oppewal, H. (2018). Combining virtual reality and mobile eye tracking to provide a naturalistic experimental environment for shopper research. Journal of Business Research, forthcoming.

Meißner, M., Musalem, A., & Huber, J. (2016). Eye-tracking reveals a process of conjoint choice that is quick, efficient and largely free from contextual biases. Journal of Marketing Research, 53(1), 1–17.

Orquin, J. L., Ashby, N. J. S., & Clarke, A. D. F. (2016). Areas of interest as a signal detection problem in behavioral eye-tracking research. Journal of Behavioral Decision Making, 29(2–3), 103–115. http://doi.org/10.1002/bdm.1867

Orquin, J. L., & Holmqvist, K. (2018). Threats to the validity of eye-movement research in psychology. Behavior Research Methods, forthcoming.

Orquin, J. L., & Mueller Loose, S. (2013). Attention and choice: A review on eye movements in decision making. Acta Psychologica, 144(1), 190–206. http://doi.org/10.1016/j.actpsy.2013.06.003

Perkovic, S., & Orquin, J. L. (2018). Implicit statistical learning in real-world environments leads to ecologically rational decision making. Psychological Science, forthcoming.

Pfeiffer, J., Pfeiffer, T., Greif-Winzrieth, A., Meißner, M., Renner, P., & Weinhardt, C. (2017). Adapting human-computer-interaction of attentive smart glasses to the trade-off conflict in purchase decisions: An experiment in a virtual supermarket. In: Schmorrow D., Fidopiastis C. (eds) Augmented Cognition. Neurocognition and Machine Learning. AC 2017. Lecture Notes in Computer Science, vol 10284. Springer, Cham.

Pfeiffer, J., Meißner, M., Prosiegel, J., & Pfeiffer, T. (2014). Classification of goal-directed search and exploratory search using mobile eye tracking. Proceedings of the International Conference on Information Systems (ICIS).

Pfeiffer, T., Renner, P., & Pfeiffer-Leßmann, N. (2016). EyeSee3D 2.0: Model-based real-time analysis of mobile eye tracking in static and dynamic three-dimensional scenes. Proceedings of the ninth biennial ACM symposium on eye tracking research & applications (pp. 189–196).